Since the second half of 2014, a dedicated SAP HANA Quick Sizer tool has been available that covers SAP Suite on HANA, including the CO-PA Accelerator option.

Also see SAP Note 1793345 Sizing for SAP Suite on HANA.

The mechanics of using the QuickSizer for CO-PA Accelerator are very easy.

1) Start the QuickSizer

2) Input the Customer No.

3) Provide a Project Name

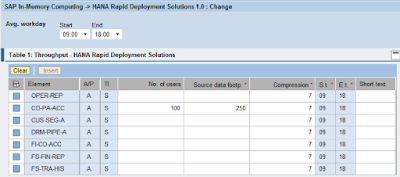

4) Select SAP In-Memory Computing

5) Select HANA Rapid Deployment Solutions

6) Locate the row element for CO-PA-ACC

7) Input the Number of CO-PA Users, the Source Data Footprint in GB, and the estimated Compression factor (7 is the default)

8) Click on the Calculate Results button

Screen print from Step 7...


===================================================================

THE INFORMATION BELOW IS NOW OUT OF DATE

===================================================================

The SAP Quick Sizer tool is now available for SAP HANA and even includes content for the CO-PA Accelerator. The mechanics of using the QuickSizer for CO-PA Accelerator are very easy.

1) Start the QuickSizer

2) Input the Customer No.

3) Provide a Project Name

4) Select SAP In-Memory Computing

5) Select HANA Rapid Deployment Solutions

6) Locate the row element for CO-PA-ACC

7) Input the Number of CO-PA Users, the Source Data Footprint in GB, and the estimated Compression factor (7 is the default)

8) Click on the Calculate Results button

Screen print from Step 7...

What is meant by number of users? It is neither the number of concurrent users nor the number of named users. Rather, the user concept is explained in SAP Note 1514966, which is excerpted here.

---------------------------------------------------------------------------------------------------

To estimate the maximum number of active users (i.e. users which

cause any kind of activity on the server within the time period

of one hour) that can be handled by a HANA server, we have chosen

the following approach.

... we assume that HANA queries can be divided into three categories

("easy", "medium", "heavy"), which differ in the amount of CPU

resources that they require. Typically, "medium" queries use twice

as much resources as "easy" ones, while "heavy" queries require ten

times as much resources.

Furthermore, we assume that HANA users can be divided into three

categories ("sporadic", "normal", "expert"). The user categories

are defined by the frequency of query execution and the mix of

queries from different categories. "Sporadic" users typically

execute one query per hour, and run 80% "easy" queries and 20%

"medium" queries; "normal" users execute 11 queries per hour,

and run 50% "easy" queries and 50% "medium" queries; and "expert"

users execute 33 queries per hour, and run 100% "heavy" queries.

------------------------------------------------------------------------------------------------------
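To make the user categories more concrete, here is a minimal Python sketch that turns the figures quoted above into an hourly load per user. The unit "easy-query equivalents" and the names `QUERY_COST`, `USER_PROFILES`, and `hourly_load` are my own illustration and do not come from the SAP Note or the QuickSizer; it only restates the arithmetic implied by the excerpt.

```python
# Relative CPU cost per query category, taking "easy" as the unit
# (medium = 2x easy, heavy = 10x easy, per the excerpt above).
QUERY_COST = {"easy": 1, "medium": 2, "heavy": 10}

# Queries per hour and query mix per user category, per the excerpt above.
USER_PROFILES = {
    "sporadic": {"queries_per_hour": 1, "mix": {"easy": 0.8, "medium": 0.2}},
    "normal": {"queries_per_hour": 11, "mix": {"easy": 0.5, "medium": 0.5}},
    "expert": {"queries_per_hour": 33, "mix": {"heavy": 1.0}},
}


def hourly_load(category):
    """Return one user's hourly load in easy-query equivalents (illustrative unit)."""
    profile = USER_PROFILES[category]
    # Average cost of a single query for this user category.
    cost_per_query = sum(
        share * QUERY_COST[query] for query, share in profile["mix"].items()
    )
    return profile["queries_per_hour"] * cost_per_query


for name in USER_PROFILES:
    print(name, round(hourly_load(name), 1))
```

Under these assumptions, one "expert" user generates as much load as 20 "normal" users, which shows why the user categories matter more for sizing than the raw head count.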

What is the source data footprint and how is it captured?

-------------------------------------------------------------------------------------------------------

Memory sizing of HANA is determined by the amount of data that

is to be stored in memory, i.e. the amount of disk space

covered by the corresponding database tables, excluding their

associated indexes. Note that if the database supports

compression, the space of the uncompressed data is needed.

-------------------------------------------------------------------------------------------------------

The SAP Note above also provides instructions on how to obtain the uncompressed table sizes

using a script or stored procedure. The method required will differ by source database but one of

the inputs to the script is to identify which ERP tables should be selected.

So how do you go about determining which CO-PA tables go into the input for the source data footprint?

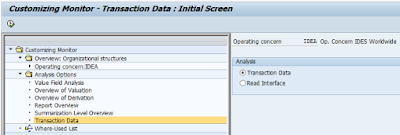

A shortcut method for determining the source data footprint is to use transaction KECM or

report RKE_ANALYSE_COPA.

Click on the item "Transaction Data" from the menu.

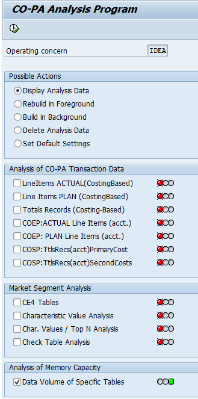

Tick the bottom item "Data Volume of Specific Tables" and then select the radio button

"Rebuild in Foreground". Execute the report. After you build the analysis, you can return to this

transaction and select the radio button "Display Analysis Data" before clicking Execute.

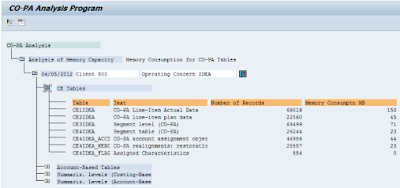

Upon execution, the report output will display record counts and memory consumption in MB

for each CO-PA table. For the CO-PA Accelerator we are only interested in the CE1, CE2, and CE4

tables being replicated into SAP HANA. However, if BusinessObjects reporting is to be used, other

tables may need to be replicated into SAP HANA, depending on the specific reporting requirements.

The memory consumption figures are calculated as a theoretical size by multiplying the number

of actual records in the table by the ABAP table length and converting to MB. However actual table

size in the database will be less because many CO-PA fields will be blank. Nevertheless this is an easy

and quick way to get a worst case uncompressed sizing for CO-PA. Just divide the Memory Consumption

numbers shown above by 1024 to input into the QuickSizer in GB.
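The conversion from the KECM figures to the QuickSizer input can be sketched in a few lines of Python. The table names and MB values below are hypothetical placeholders (your CE1, CE2, and CE4 tables carry your own operating concern as a suffix), and `footprint_gb` is just a helper name chosen for this example.

```python
def footprint_gb(table_sizes_mb):
    """Sum per-table Memory Consumption figures (MB) and convert to GB."""
    return sum(table_sizes_mb.values()) / 1024


# Hypothetical Memory Consumption figures in MB, as they might be read from KECM.
kecm_sizes_mb = {
    "CE1XXXX": 150_000,  # actual line items (placeholder name and value)
    "CE2XXXX": 40_000,   # plan line items (placeholder name and value)
    "CE4XXXX": 14_800,   # segment table (placeholder name and value)
}

print(footprint_gb(kecm_sizes_mb))  # value to enter as Source Data Footprint in GB
```

With these placeholder numbers the three tables add up to 204,800 MB, so the value to enter in the QuickSizer would be 200 GB.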

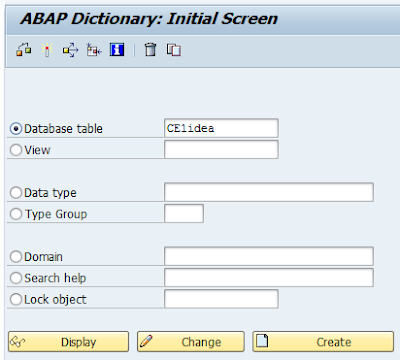

To understand further the Memory Consumption figures shown above, use transaction SE11 to display

a CO-PA table.

Then select from the menu Extras --> Table Width...

The Width of Table popup screen is displayed.

To verify the Memory Consumption figure above, multiply the number of records in the

table by the ABAP Length:

69,019 x 2,274 = 156,949,206 bytes, which divided by (1024)^2 converts to 150 MB (rounded),

as shown in KECM.
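The same check can be written as a small Python function, which is handy if you need to repeat it for many tables. The function name `theoretical_size_mb` is only an illustration; the inputs are the record count and the ABAP length from the example above.

```python
def theoretical_size_mb(record_count, abap_length_bytes):
    """Worst-case uncompressed table size in MB: records x ABAP length."""
    size_bytes = record_count * abap_length_bytes
    return size_bytes / 1024 ** 2


size_mb = theoretical_size_mb(69_019, 2_274)
print(round(size_mb))  # 150, matching the KECM figure
```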

If your CO-PA Accelerator scenario will involve BusinessObjects reporting then you will use SLT

to replicate not only the CO-PA transaction data but also the related master data and text tables

into SAP HANA. You might want to expand the source data footprint to include these additional

table sizes in the QuickSizer tool. (However our early customer feedback indicates the master

data and text tables have little influence on HANA sizing.)

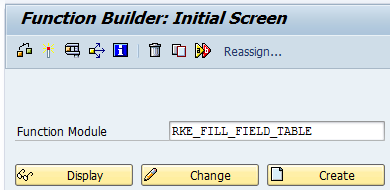

Nevertheless, in order to identify the CO-PA related tables you can execute function module

RKE_FILL_FIELD_TABLE from transaction SE37.

After pressing F8 or clicking on the Test/Execute icon you will input the Operating Concern

for field ERKRS and input '1' in the PA_TYPE field for costing-based CO-PA.

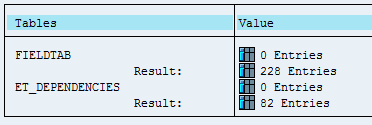

Press F8 or click Execute again and you will see the Table results.

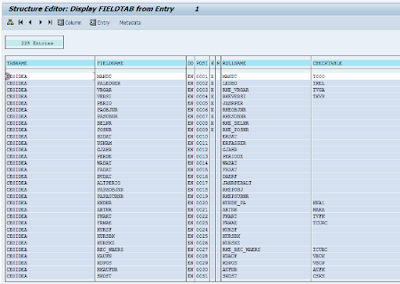

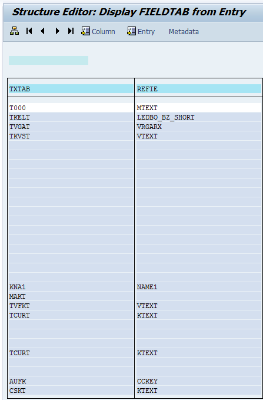

Click on the Details View/Edit icon next to the 228 Entries under FIELDTAB.

You will need to take note of all of the master data tables listed under CHECKTABLE.

Scroll to the right and also take note of all of the text tables listed under TXTAB.

To get the source data footprint for these tables you can use the script mentioned in SAP Note 1514966.

Alternatively, you could calculate the Memory Consumption manually for each table using the method

shown above, where you would obtain record counts using transaction SE16 for each table.

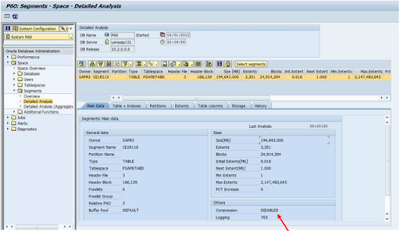

As a final option, you can also view table sizes in transaction DB02 to obtain the source data footprint.

But there are a few things to watch out for when using DB02. First, only include the Data size and not the

Index size in the QuickSizer tool. Also be sure to convert from KB to GB for the QuickSizer.

However, do not use DB02 if database compression is active on the table, because it will not give

you the correct (uncompressed) value to input into the SAP HANA QuickSizer.
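The DB02 rules above (data size only, KB to GB, no compressed tables) can be captured in a short Python sketch. The dictionary layout, table names, and sizes are hypothetical and would have to be typed in from the DB02 screen; nothing here reads from DB02 itself.

```python
KB_PER_GB = 1024 ** 2


def db02_footprint_gb(tables):
    """Sum DB02 data sizes (KB) into GB, ignoring indexes and rejecting compressed tables."""
    total_kb = 0
    for table in tables:
        if table["compressed"]:
            # A compressed size would understate the uncompressed footprint.
            raise ValueError(f"{table['name']} is compressed; use another method")
        total_kb += table["data_kb"]  # index_kb is deliberately left out
    return total_kb / KB_PER_GB


# Hypothetical figures as they might be noted down from DB02.
tables = [
    {"name": "CE1XXXX", "data_kb": 41_943_040, "index_kb": 8_388_608, "compressed": False},
    {"name": "CE4XXXX", "data_kb": 10_485_760, "index_kb": 2_097_152, "compressed": False},
]

print(db02_footprint_gb(tables))  # 50.0 GB for these placeholder values
```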

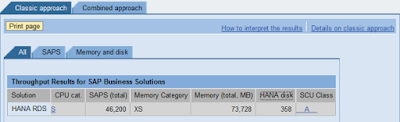

Coming back to the QuickSizer tool, here is an example of the output it generates based on 100 users and

250 GB of source data.

1. CPU category - S (Small)

2. SAPS (total) - 46,200

3. Memory category - XS (eXtra Small)

4. Memory (MB) - 73,728

5. HANA disk GB - 358

6. SCU Class - A

The above information should be sufficient data for you to have discussions with a hardware provider

on what size HANA appliance may be required for implementing the CO-PA Accelerator.

Finally, you may need to adjust the source data footprint input value if your organization expects growth in the

CO-PA tables due to additional periods of data retention or ERP rollouts to new business units, or if

you seek to move archived CO-PA data into SAP HANA.

Source: scn.sap.com
